Search engine optimization is a lot like building a house:

- Technical SEO is like the foundation

- On-page SEO is like the wood framing of the house

- Off-page SEO is like the finished work (paint, cabinets, flooring, fixtures, etc.)

Remove the foundation, and the entire house crumbles to the ground.

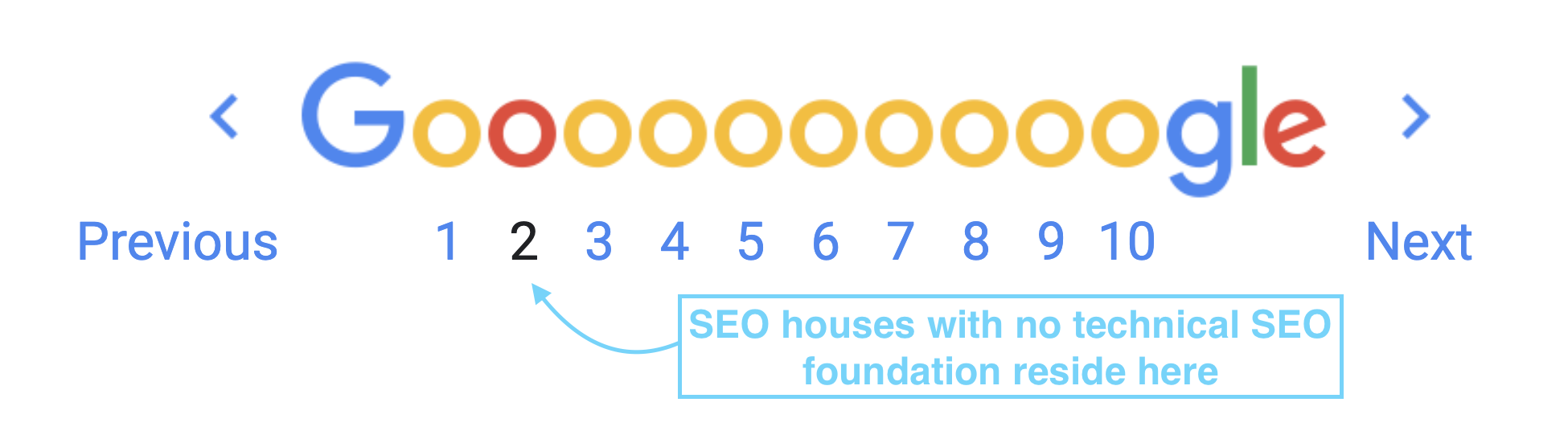

This is what a house with no foundation looks like:

This is what an SEO house with no technical foundation looks like:

Nobody wants to live on 2nd Street of Google (if you know what I mean).

Which is why we’re going to teach you how to build a technical SEO foundation so reliable and sturdy that even a category five SEO hurricane (AKA Google algorithm update) can’t take your house down.

1st Street, here we come.


What is technical SEO?

Technical SEO refers to optimizing the technical aspects of your website so search engine spiders can crawl and index it more easily, and so it stays secure for visitors.

For example, server configuration, page speed, mobile-friendliness, and SSL security all help search engines crawl and index your website better and faster so you can rank higher, whether directly or indirectly.

Technical SEO also helps improve the user experience for your visitors. For example, a faster, more mobile responsive page makes for easier navigation. And what’s good for people is good for Google.

Technical SEO includes the following core factors:

- Crawlability

- Indexation

- Page speed

- Mobile-friendliness

- Site security (SSL)

- Structured data

- Duplicate content

- Site architecture

- Sitemaps

- URL structure

Technical SEO vs. on-page SEO

Like technical SEO, on-page optimization refers to the optimization of different elements within your site (as opposed to off-page optimization that optimizes elements off site).

However, on-page SEO takes a page-by-page approach to optimizing content, whereas technical SEO takes a sitewide approach to optimizing the backend of your website.

Think of technical SEO like working on the engine of a car (under the hood) and on-page SEO like working on the body.

Why is technical SEO important?

Bottom line: without a sound technical foundation, the greatest content in the world won’t find the first page of search results.

Factors like page speed, website security, and mobile usability are direct ranking factors, which means slower websites without SSL security or mobile responsiveness will rank lower than competitors (all other things being equal). Google’s rules, not ours.

And if your website gets fewer pages crawled less often, you limit the number of pages that can appear in search to begin with.

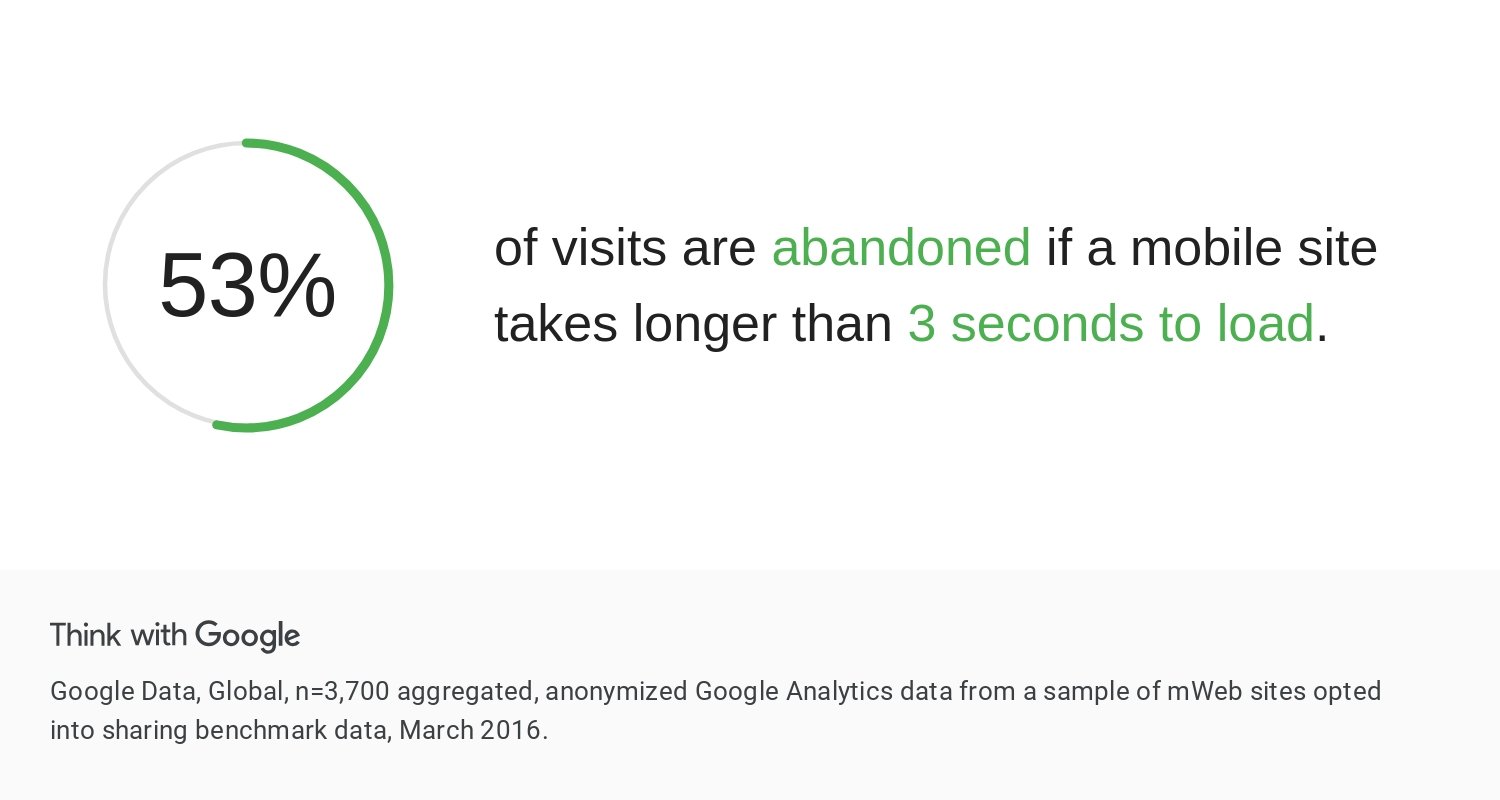

Then there are your website visitors. The average website visitor expects a mobile page to load in 1-2 seconds, and according to Google’s research, 53% of mobile visits are abandoned when a page takes longer than three seconds to load.

Technical SEO builds a solid foundation for crawlability, indexation, security, and usability, all of which are prerequisites for ranking.

Technical SEO checklist

Nothing in SEO is as easy as checking a box on a list anymore. Not even close.

But with technical SEO, each responsibility falls within one of four categories:

- Site hierarchy

- Crawl, index, render

- Security

- Usability

1. Website hierarchy

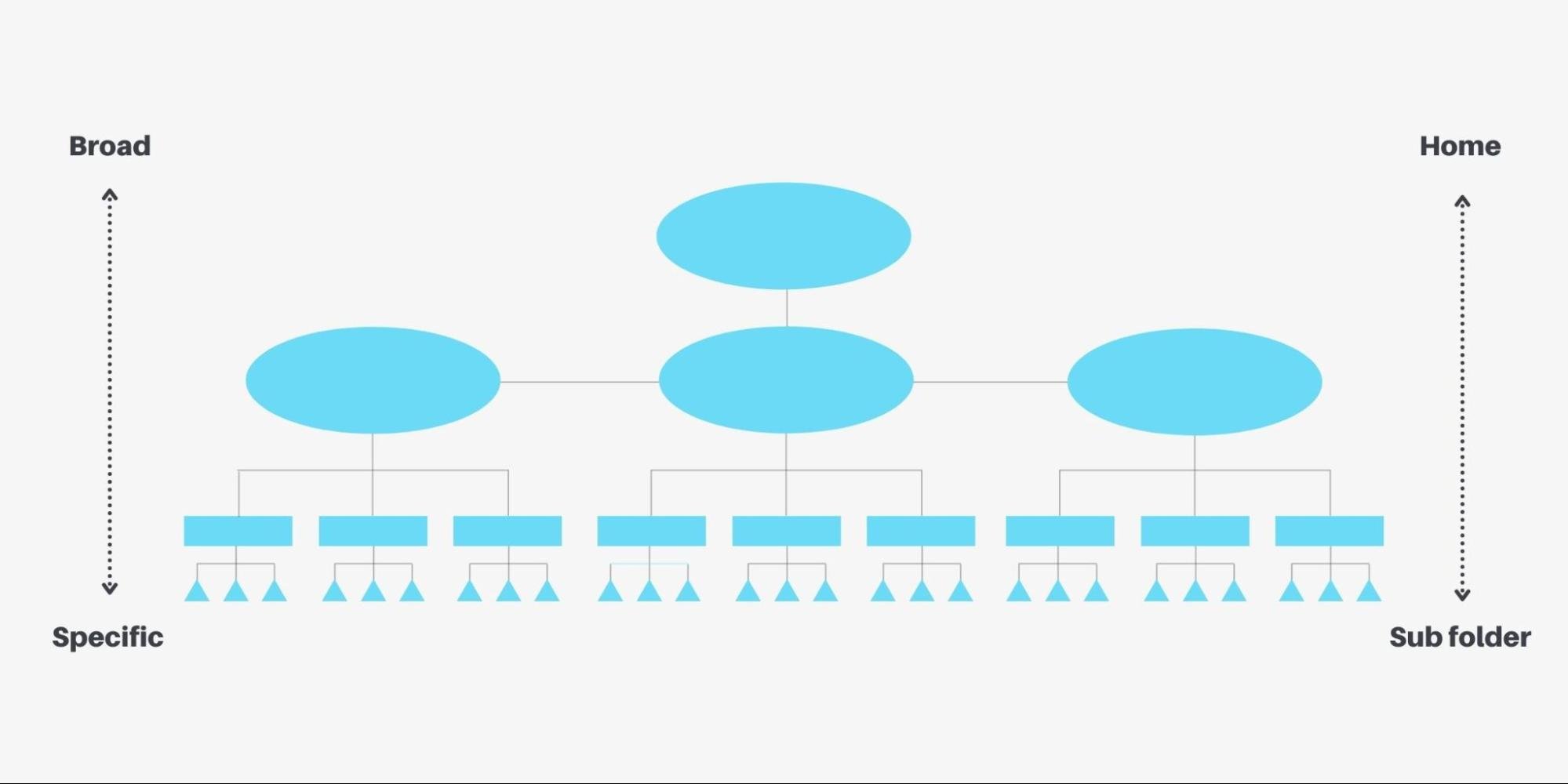

Website structure (or architecture) refers to the hierarchical organization of your website, from general (homepage) to specific (blog post).

Visitors rely on a well-organized website hierarchy to find what they’re looking for quickly. And Google relies on a well-organized website hierarchy to understand which pages you think are most important and which pages relate to others.

In the words of Google, when it comes to site structure, heed the following:

“Make it as easy as possible for users to go from general content to the more specific content they want on your site. Add navigation pages when it makes sense and effectively work these into your internal link structure. Make sure all of the pages on your site are reachable through links, and that they don't require an internal search functionality to be found. Link to related pages, where appropriate, to allow users to discover similar content.”

—Google

How do you ensure your technical SEO specialist hits a site architecture homerun?

- Flat structure

- Breadcrumb navigation

- XML sitemap

- URL structure

Flat structure

Your website should adopt a flat hierarchy, meaning that any page on your website should only be 1-3 clicks away from the home page. Like this:

You can (and should) make your website easily navigable using a hierarchical navigation menu. But Google suggests that visitors or search engines should be able to find every page on your website by following internal links within the content alone:

“In general I’d be careful to avoid setting up a situation where normal website navigation doesn’t work. So we should be able to crawl from one URL to any other URL on your website just by following the links on the page.”

—Google’s John Mueller

Let’s look at a real-world example of a flat hierarchy that uses internal linking to connect pages (our SEO category page):

Our SEO guide functions as a category page that keeps our most important pages two clicks from the home page.

Within each of these respective articles, we also internally link to the other articles in the same chapter, ensuring that our structure groups related content with the same family.

If Google can only find certain pages by looking at our sitemap (and not by following internal links), not only does it make it harder for them to crawl and index pages, but it makes it impossible for them to understand how relevant a page is in relation to other pages. And if Google can’t tell how important or relevant you think a page is, they’re not going to show it to searchers.
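To make the “1-3 clicks” rule concrete, here’s a minimal sketch that measures click depth with a breadth-first search over an internal-link graph. The `site` dictionary and its URLs are hypothetical; in practice you’d build that graph from a crawl of your own website.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the home page; returns {url: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/seo/", "/ppc/"],
    "/seo/": ["/seo/technical-seo/", "/seo/keyword-research/"],
    "/seo/technical-seo/": ["/seo/keyword-research/"],
    "/orphan/": ["/"],  # no page links here, so following links never reaches it
}

depths = click_depths(site)
too_deep = [url for url, depth in depths.items() if depth > 3]
orphans = [url for url in site if url not in depths]
```

Pages that land in `too_deep` sit more than three clicks from the home page, and pages in `orphans` can only be discovered through a sitemap, which is exactly the situation described above.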

Breadcrumb navigation

Remember the story of Hansel and Gretel?

Ya know, the one where they leave a trail of breadcrumbs behind so they can find their way home?

Well, breadcrumb navigation (inspired by the story) uses the same approach to help website visitors easily identify where they are on your website so they can find their way back to your homepage.

For example, this is what the breadcrumb navigation looks like on our blog posts:

Without breadcrumb navigation, if a visitor enters our website by finding our keyword research article through search (likely), they won’t know where they are on the site.

Our breadcrumb navigation provides quick links in a hierarchical order that will take visitors back to our SEO category page.

Bonus: Since search engines also use internal links to crawl your website, breadcrumb navigation makes it easier for them to find more pages.

Implementing breadcrumb links is easy. In fact, most major content management systems (CMS) like WordPress, Squarespace, or Wix either include breadcrumbs automatically or have a plugin that will do it for you.
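If your CMS doesn’t handle breadcrumbs for you, the underlying idea is simple enough to sketch. The example below assumes your URL path mirrors your site hierarchy (which won’t be true for every website) and uses a placeholder domain. The labels are derived naively from the URL slugs; a real implementation would pull them from page titles.

```python
from urllib.parse import urlparse


def breadcrumb_trail(url):
    """Return [(label, href), ...] from the home page down to the current page."""
    parsed = urlparse(url)
    root = f"{parsed.scheme}://{parsed.netloc}"
    trail = [("Home", root + "/")]
    path = ""
    for segment in [s for s in parsed.path.split("/") if s]:
        path += "/" + segment
        # Naive label: "keyword-research" -> "Keyword Research"
        label = segment.replace("-", " ").title()
        trail.append((label, root + path + "/"))
    return trail


trail = breadcrumb_trail("https://example.com/seo/keyword-research/")
print(" > ".join(label for label, _ in trail))
# Home > Seo > Keyword Research
```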

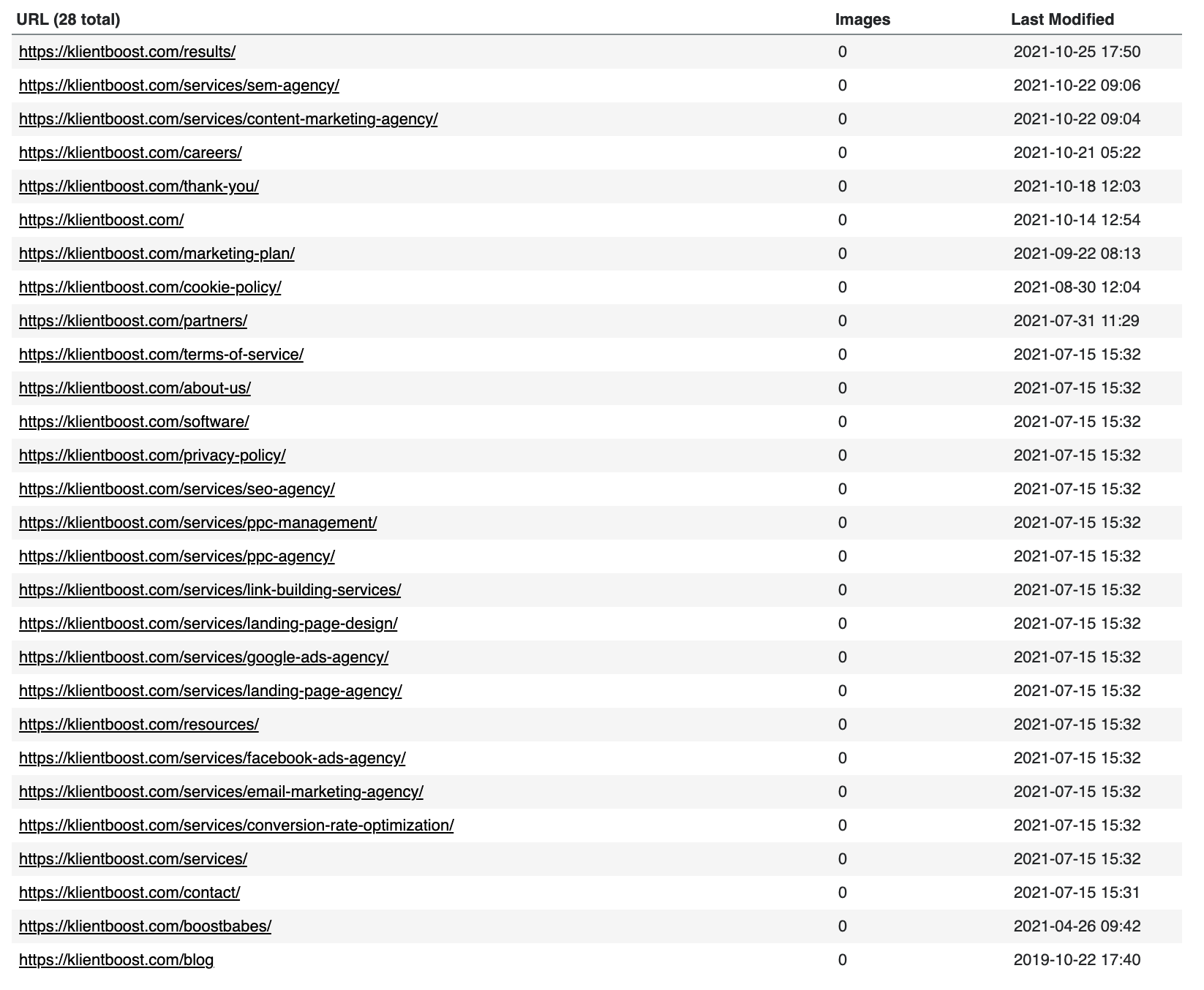

XML sitemap

An XML sitemap is a map to your website (in the form of a file) that search engines use to crawl and index your pages. Think of it as a fallback, or a secondary discovery method for search engines.

If you’ve never seen one, it looks like this:

While not necessary (search engines do a pretty good job of finding your content without it), a sitemap ensures that Google can find, crawl and index every page on your website, even ones that have no internal links or that don’t appear in the navigation.
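For a sense of what goes into the file, here’s a rough sketch that builds a bare-bones sitemap with Python’s standard library. The URLs and dates are placeholders, and the sketch only writes the `loc` and `lastmod` fields from the sitemaps.org protocol; most CMS plugins generate something more complete.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (url, last_modified) pairs, dates as YYYY-MM-DD strings."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/seo/technical-seo/", "2024-02-01"),
])
```

Save the returned string as `sitemap.xml` at the root of your website so search engines can fetch it.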

Who needs a sitemap? According to Google, the following websites should include a sitemap:

- Larger websites (100+ pages)

- New websites with few external links

- Websites with sections of content that have no internal links

- Sites with rich media like videos and images or news articles

Lucky for you, we wrote an entire article on creating and optimizing a sitemap: How to Create and Optimize an SEO Sitemap.

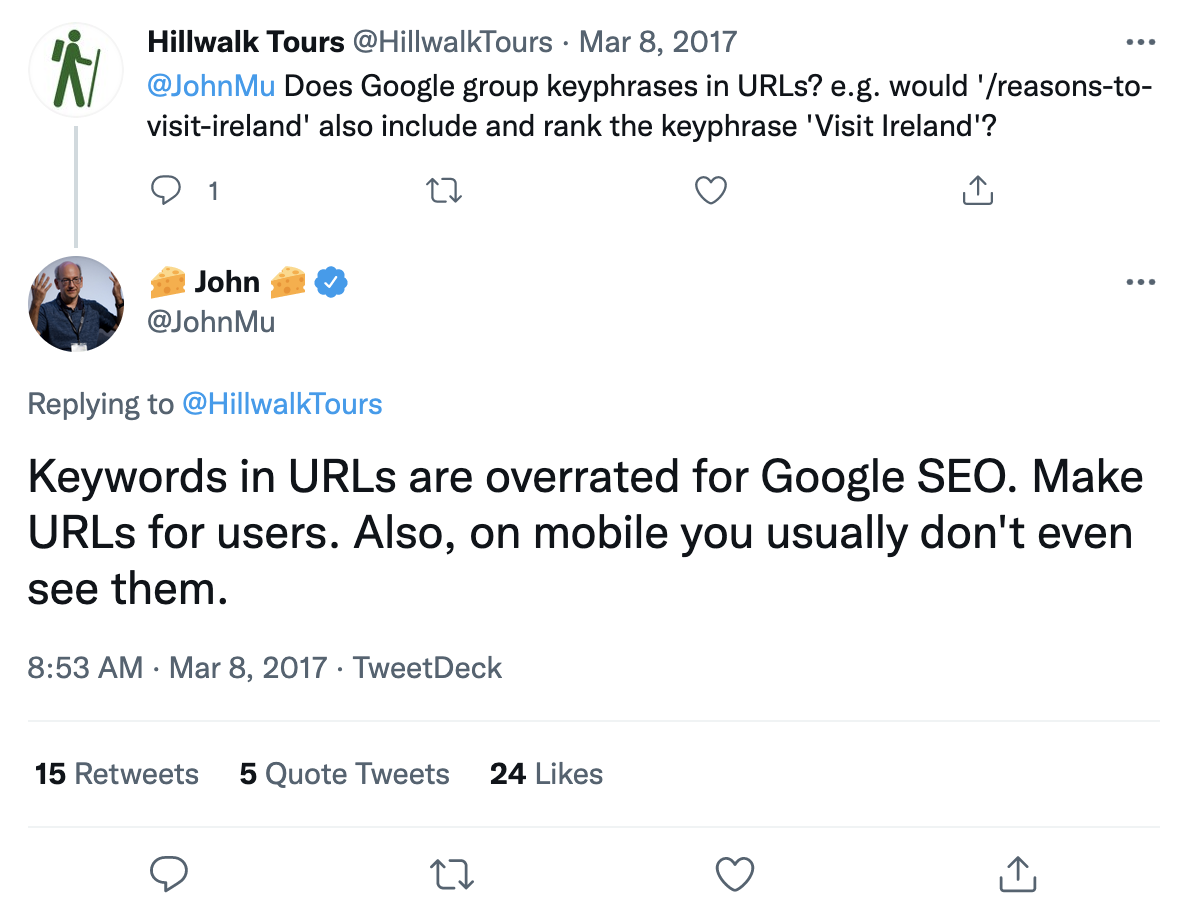

URL structure

A URL is the address to a website or web page.

In the past, SEO specialists stuffed URLs with keywords in hopes of ranking higher, but today, Google has made it clear: keywords in URLs don’t hold much weight, if any.

However, Google does use URLs to understand the relevance of your content and better crawl your website.

Plus, using the proper URL syntax can help website visitors easily identify their place on your website and understand what a page is about.

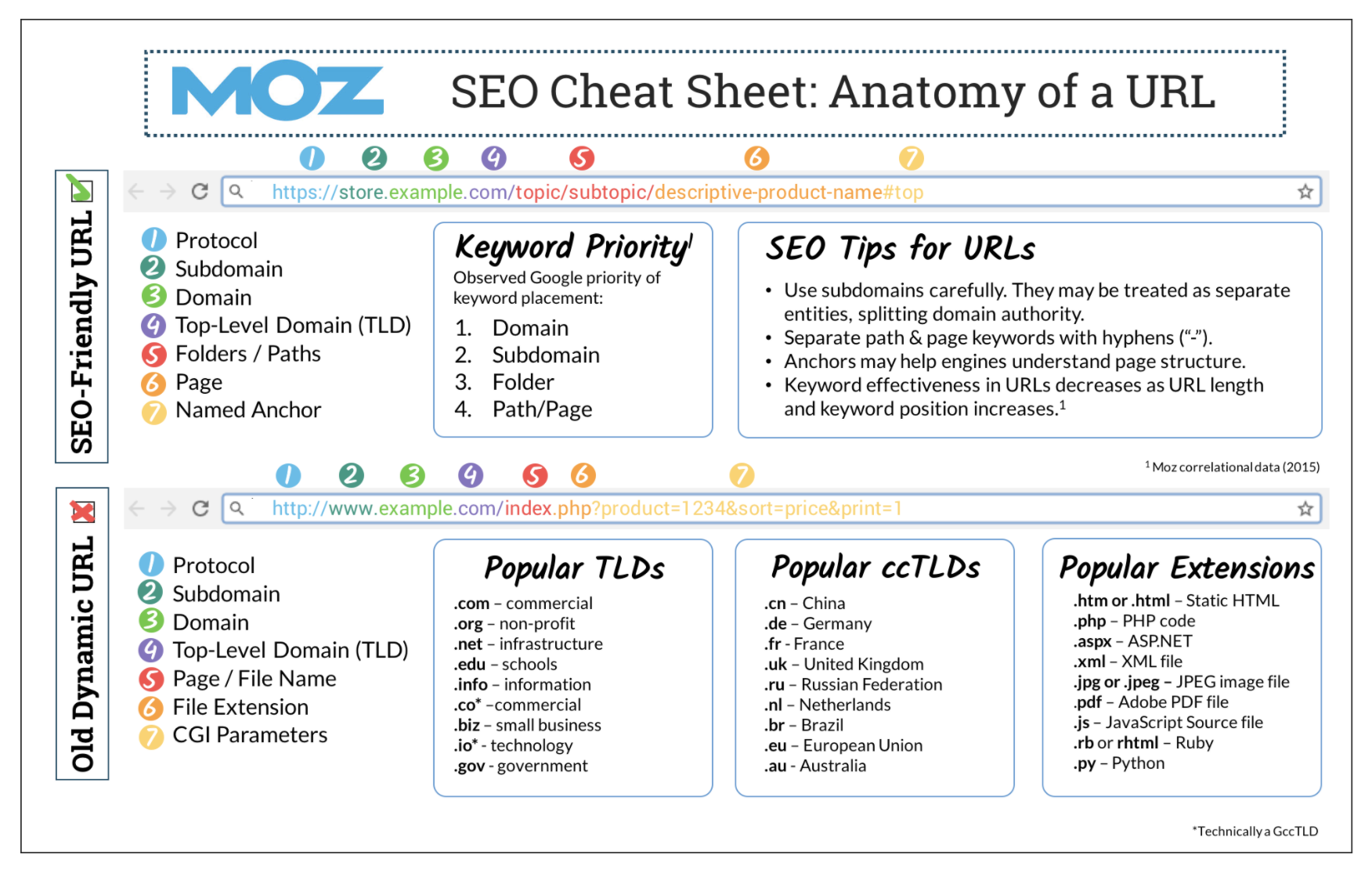

What does a good URL look like?

Let’s look at Moz’s handy cheat sheet:

According to Google, proper URL structure should look clean, logical, and simple:

- Keep URLs simple and descriptive: avoid ID numbers or undescriptive, complex words, parameters, or numbering (e.g. don’t do this: example.com/?id_sezione=360&sid=3a5ebc944f41daa6f849f730f1)

- Keep URLs short: use short and punchy slugs, not entire titles of pages (e.g. klientboost.com/seo/duplicate-content)

- Use hyphens (not underscores) between words: hyphens help people and search engines identify concepts in the URL more easily (e.g. example.com/technical-seo, not example.com/technical_seo or example.com/technicalseo)

- Use https:// not http://: like we mentioned earlier, secure your site with an SSL certificate

- Hide the www: it only makes URLs longer (e.g. klientboost.com not www.klientboost.com)

- Eliminate stop words: don’t use words like the, or, a, and, or an; they only make URLs longer (e.g. use example.com/seo, not example.com/this-is-an-article-about-seo)

- Remove dates from blog articles: turn klientboost.com/seo/01/15/19/on-page-optimization into klientboost.com/seo/on-page-optimization
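Several of the rules above (lowercase words, hyphens, no stop words) can be automated when you turn a page title into a slug. Here’s a minimal sketch; the stop-word list is just the handful of words named above, not an exhaustive one, and the function doesn’t handle accented characters or apostrophes gracefully.

```python
import re

# Only the stop words mentioned above; extend as needed.
STOP_WORDS = {"a", "an", "and", "or", "the"}


def slugify(title):
    """Lowercase, drop punctuation and stop words, join what's left with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    kept = [word for word in words if word not in STOP_WORDS]
    return "-".join(kept)


print(slugify("The Guide to Technical SEO and Crawling"))
# guide-to-technical-seo-crawling
```

You’d still want to shorten long slugs by hand, since a script can’t tell which words actually matter to searchers.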

2. Crawl, index, render

Make no mistake, your site architecture is the first step to ensuring Google can properly crawl and index your website.

But the following technical SEO responsibilities take crawling and indexing a step further, and help Google render your website in search results better.

- Duplicate content

- Structured data

- Robots.txt

Duplicate content

Duplicate content is any content that appears in more than one place, either on multiple pages of your own site or on your site and another site.

Not ancillary information that you’d find sitewide in a header or footer, but large blocks of content that are exactly the same.

While duplicate content is common (and expected in many cases), it can lead to poor rankings, less organic traffic, brand cannibalization, backlink dilution, and unfriendly SERP snippets if not managed properly.

Why does duplicate content fall under technical SEO? Because most of the time, duplicate content issues arise due to technical errors like server configuration or poor canonicals.

Plus, Google allots each site a limited crawl budget, which means that if you make them crawl unnecessary duplicate pages, you risk exhausting that crawl budget before they get to pages that need indexing.

Lucky for you (again), we wrote an entire article about finding and fixing duplicate content: Duplicate Content: How to Avoid it Using Canonical Tags.
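One common technical cause of duplicates is the same page answering at several URL variants (http vs. https, www vs. non-www, trailing slashes, tracking parameters). As a rough sketch, you could group a list of crawled URLs by a normalized key to spot those variants. The URLs below are placeholders, and the normalization rules are assumptions: dropping the query string, for instance, is only safe if your parameters don’t change the page content.

```python
from collections import defaultdict
from urllib.parse import urlparse


def canonical_key(url):
    """Collapse protocol, www, trailing-slash, and query-string variants into one key."""
    parsed = urlparse(url)
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parsed.path.rstrip("/") or "/"
    return host + path


def find_duplicate_urls(urls):
    """Return {key: [variants]} for every key reached by more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_key(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


# Placeholder crawl output for illustration.
crawled = [
    "http://example.com/seo/",
    "https://www.example.com/seo",
    "https://example.com/seo/?utm_source=newsletter",
    "https://example.com/ppc/",
]
duplicates = find_duplicate_urls(crawled)
```

Each group in `duplicates` is a candidate for a redirect or a canonical tag pointing at the one version you want indexed.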

Schema structured data

Schema.org markup is a type of structured data that search engines use to understand the meaning and context of your page.

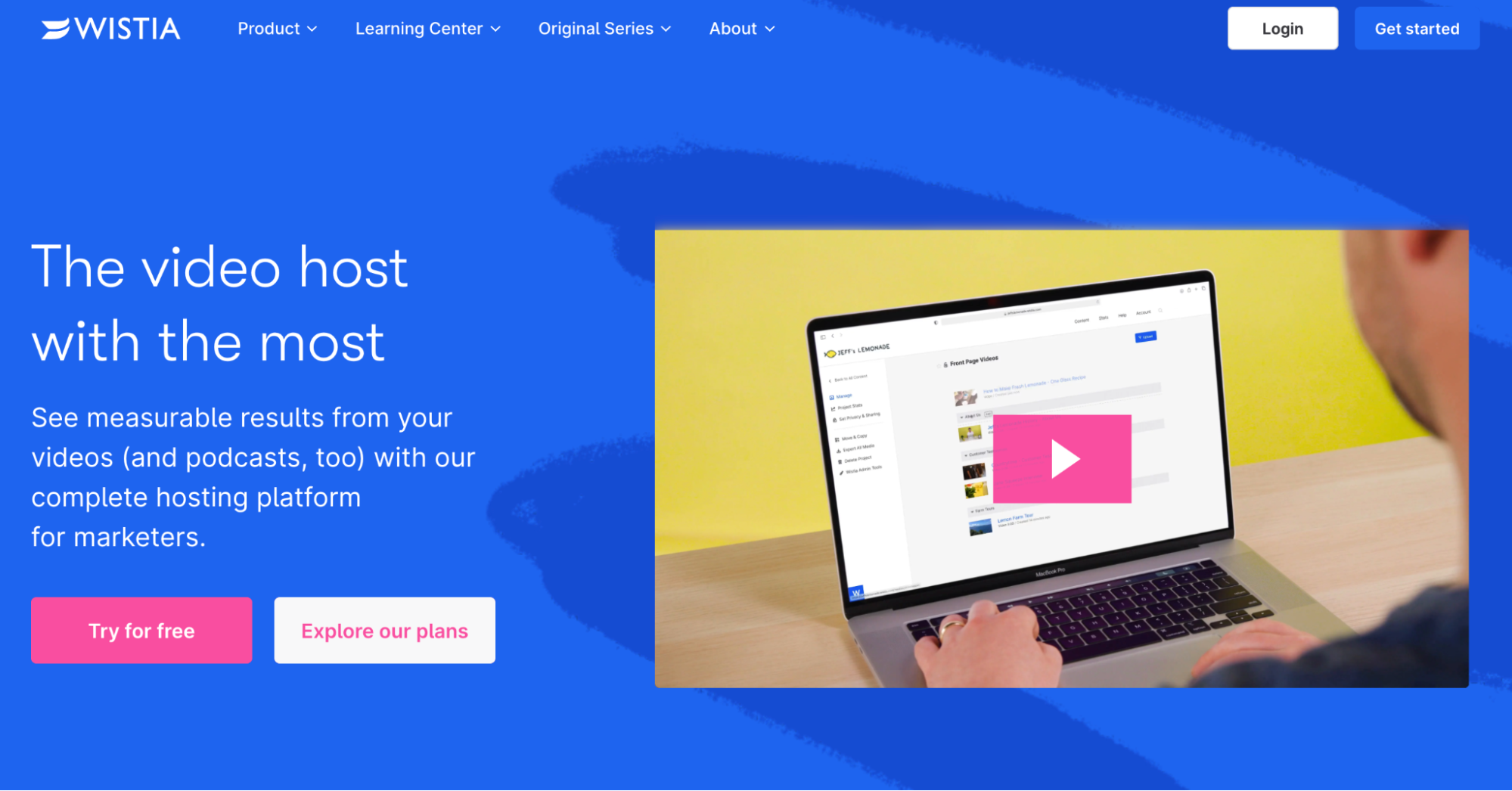

You or I can look at a page and understand the words, their meaning, and how they relate to each other. For example, if we look at the Wistia homepage, not only do we know that this is Wistia’s website (from the URL and logo), but we also know that the words and video are talking about Wistia’s video player.

For search engines, it’s much harder to make those connections, especially knowing that the video is talking about Wistia’s product.

Thankfully, with schema markup, you can tell Google explicitly that the video is talking about Wistia’s video player. No guessing.

Even better: you can tell Google how long the video is, what day it was published, where to timestamp certain sections, what the video is about, and what thumbnail to use so they can use that information within SERPs.
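Here’s a small sketch of what that video markup could look like, generated as JSON-LD. The property names (`name`, `description`, `thumbnailUrl`, `uploadDate`, `duration`) come from schema.org’s VideoObject type; every value passed in is a made-up placeholder, not Wistia’s actual markup.

```python
import json


def video_json_ld(name, description, thumbnail_url, upload_date, duration):
    """Build a schema.org VideoObject wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date
        "duration": duration,       # ISO 8601 duration, e.g. PT1M30S = 1 min 30 sec
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Placeholder values for illustration only.
markup = video_json_ld(
    name="Product overview",
    description="A short tour of the video player.",
    thumbnail_url="https://example.com/thumbnail.jpg",
    upload_date="2024-01-15",
    duration="PT1M30S",
)
```

The resulting script tag goes in the HTML of the page that hosts the video.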

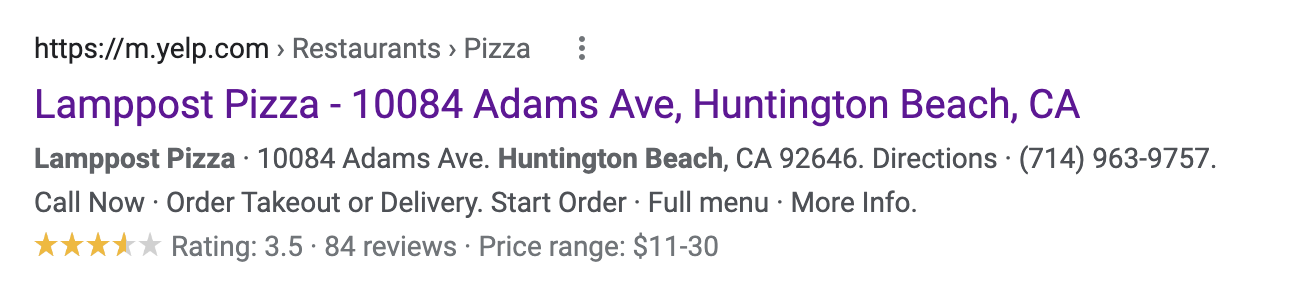

Bonus: Google also uses structured schema to populate their rich snippets.

A rich snippet is a SERP result with added information. Rich snippets have been proven to increase click-through rates. Let’s look at an example of a review rich snippet:

Lucky for you (again, again), we wrote an entire article about schema markup: Schema Markup for SEO: What it is and How to Implement It.

Robots.txt

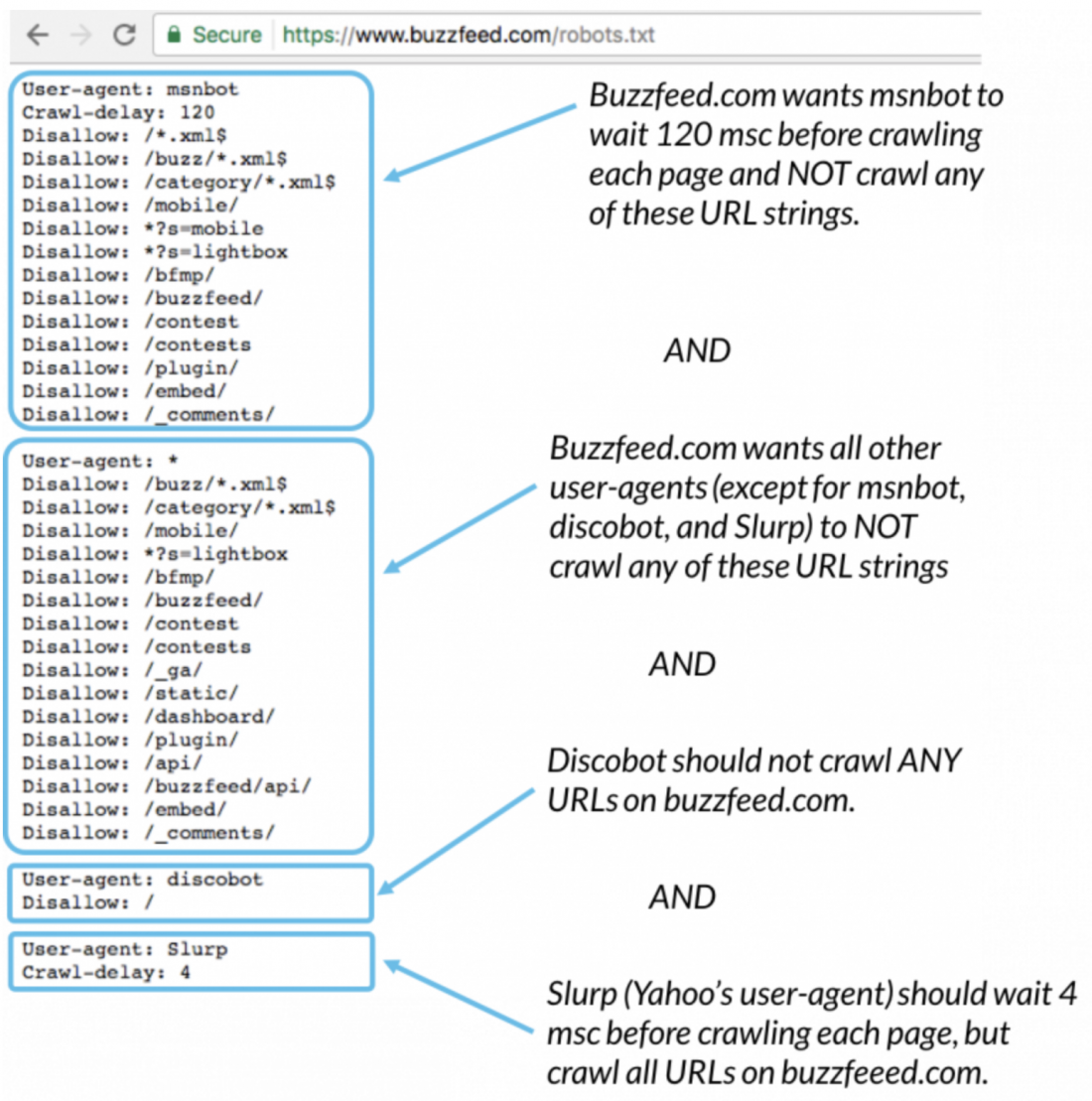

The robots.txt file is a file that lives on your website (at example.com/robots.txt) and tells any web crawler (including search engines) how to crawl the pages on your website. It’s also where you designate the link to your sitemap.

You can tell search engines (and other web crawlers) not to crawl your website or a specific page on your website. Just like you can tell them to wait a set number of seconds before crawling.

For example, let’s look at Buzzfeed's robots.txt file:

When it comes to technical SEO and your robots.txt file, your main objective is to control which pages Google can and can’t crawl.

For example, when developing a new website on a staging domain before pushing it live, you wouldn’t want Google to crawl and index it yet. But after pushing it live, you’d absolutely want Google to start crawling it.

In both cases, you would use your robots.txt file to tell Googlebot whether it’s allowed to crawl your website.

To do that, follow the robots.txt syntax below:

- Prevent all web crawlers from crawling your site:

      User-agent: *
      Disallow: /

- Allow all web crawlers to crawl your site:

      User-agent: *
      Allow: /

- Block specific crawlers from specific pages:

      User-agent: Googlebot
      Disallow: /seo/keywords/
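Before you publish a robots.txt file, it’s worth checking that the rules do what you expect. Here’s a minimal sketch using Python’s built-in `urllib.robotparser` against the three rule sets above. The example.com URLs are placeholders, and keep in mind that Python’s parser may not match Googlebot’s behavior in every edge case.

```python
from urllib.robotparser import RobotFileParser


def parse_robots(text):
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


block_all = parse_robots("User-agent: *\nDisallow: /")
allow_all = parse_robots("User-agent: *\nAllow: /")
block_one = parse_robots("User-agent: Googlebot\nDisallow: /seo/keywords/")

print(block_all.can_fetch("Googlebot", "https://example.com/"))               # False
print(allow_all.can_fetch("Googlebot", "https://example.com/"))               # True
print(block_one.can_fetch("Googlebot", "https://example.com/seo/keywords/"))  # False
print(block_one.can_fetch("Googlebot", "https://example.com/seo/"))           # True
print(block_one.can_fetch("Bingbot", "https://example.com/seo/keywords/"))    # True
```

The last line shows why the user agent matters: a rule written for Googlebot does nothing to stop other crawlers.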

Last, if you wish to keep search engines from indexing a specific page (at the page level, not within your robots.txt), you can do that by using the robots meta tag.

For example, if you didn’t want Google to index example.com/page-one, within the <head> section of that page, you would include the following code:

<meta name="robots" content="noindex, follow" />

If you also didn’t want search engines to follow the links on that page, you would use “noindex, nofollow” instead. (Google has to be able to crawl the page to see this tag, so don’t also block it in your robots.txt.)
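If you’re auditing a batch of pages, you can read the robots meta tag programmatically. This is a minimal sketch using Python’s built-in HTML parser; it assumes the directives are comma-separated (as the robots meta tag expects) and only looks at `name="robots"`, not crawler-specific tags like `name="googlebot"`. The sample HTML is hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page source for illustration.
page = '<html><head><meta name="robots" content="noindex, follow" /></head><body></body></html>'
directives = robots_directives(page)
print("noindex" in directives)  # True
```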

3. Security

If visitors hand over personal information, will your website prevent a hacker from intercepting it?

You better hope so. If not, your rankings will suffer.

Good news: for technical SEO, website security comes down to owning an SSL certificate. That’s it.

SSL certificate

SSL stands for Secure Sockets Layer, and an SSL certificate ensures the connection between your website and a browser is secure and encrypted for visitors.

That means any personally identifiable information (like names, addresses, phone numbers, and dates of birth), login credentials, credit card info, or medical records that visitors submit are encrypted in transit and protected from anyone trying to intercept them.

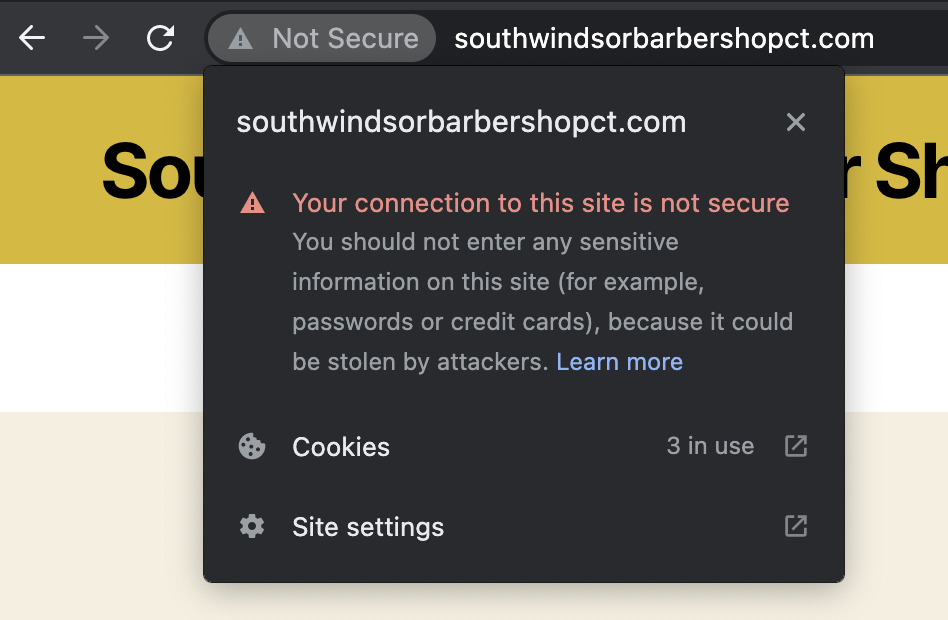

How do you know if your site has an SSL certificate?

Websites with SSL certificates use the https:// (Hypertext Transfer Protocol Secure) protocol before the URL, whereas insecure connections use the standard http:// protocol. Also, secure sites have a closed padlock icon before their URL; insecure sites don’t.
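If you’d rather check from a script than from the address bar, here’s a rough sketch that reads a certificate’s expiry date using Python’s standard `ssl` module. The function names are our own, `fetch_not_after` needs network access, and any hostname you pass in is up to you; treat it as a starting point rather than a full monitoring setup.

```python
import socket
import ssl
import time


def fetch_not_after(hostname, port=443, timeout=5):
    """Open a TLS connection and return the certificate's expiry string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """not_after uses the certificate date format, e.g. 'Jan  5 09:34:43 2018 GMT'."""
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return int((expires - now) // 86400)
```

Calling `days_until_expiry(fetch_not_after("example.com"))` returns the number of whole days left on that certificate, and the connection itself fails with an `ssl.SSLError` if the certificate can’t be verified.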

Why are SSL certificates so important, other than security?

First, Google favors secure websites over non-secure websites in rankings. Google describes HTTPS as a lightweight ranking signal, but if you don’t have an SSL certificate, you’ll still rank lower than websites that do (all other things being equal).

Second, if your site is not secure, Google Chrome will show a “Not Secure” warning in the browser, which can quickly erode visitor trust and cost you visitors.

You can buy an SSL certificate from any web hosting provider like GoDaddy, Bluehost, Wix or Squarespace (comes with a website), or Namecheap.

4. Usability

We’ve said this a hundred times, and we’ll say it a hundred more: what’s good for visitors is good for Google.

That’s because Google needs visitors to enjoy their search experience, otherwise they wouldn’t own 93% of the search market.

Make no mistake, website hierarchy and secure encryption also benefit the user experience, but Google wants every technical SEO specialist to pay attention to page speed and mobile friendliness, both of which are explicit ranking factors in Google’s algorithm.

- Page speed (site speed)

- Mobile friendliness

- Core Web Vitals

Page speed

Faster websites rank higher and sell more, period.

Google has stated that page speed is a direct ranking factor, which means that faster sites will outrank slower sites (all other things being equal).

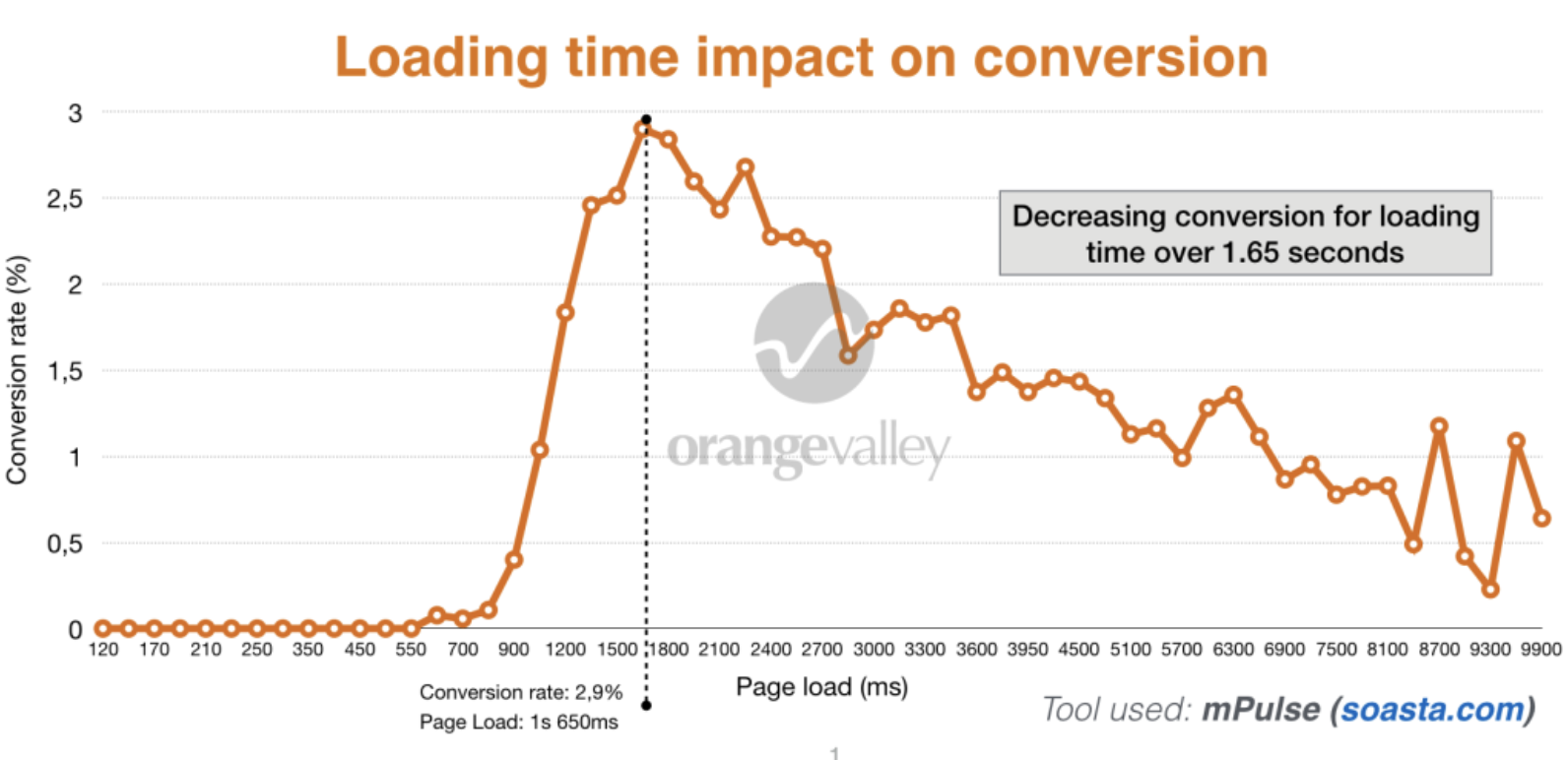

And slower websites convert significantly less traffic:

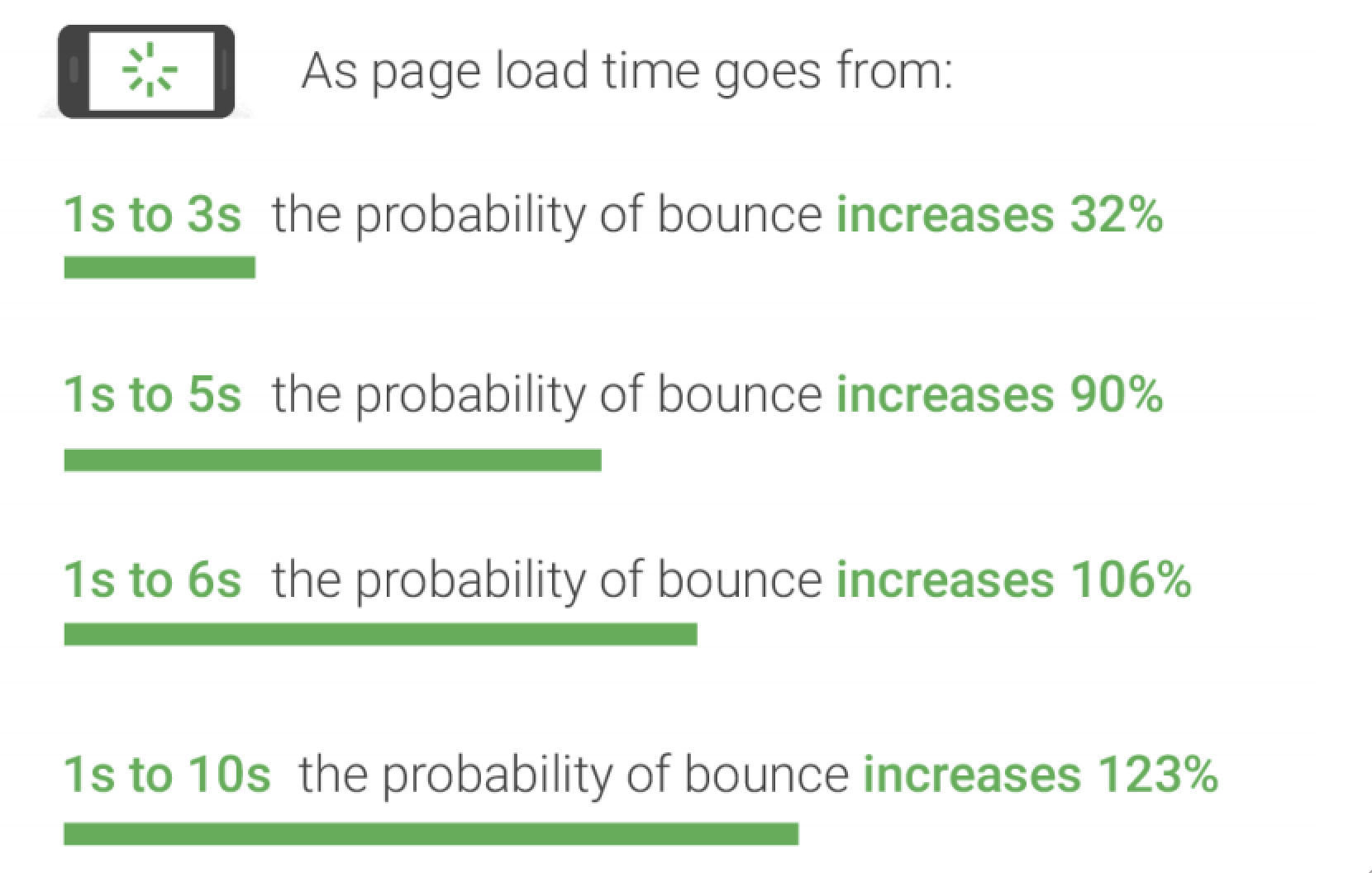

Why do slower pages convert less? Because people don’t have the patience to stick around and wait:

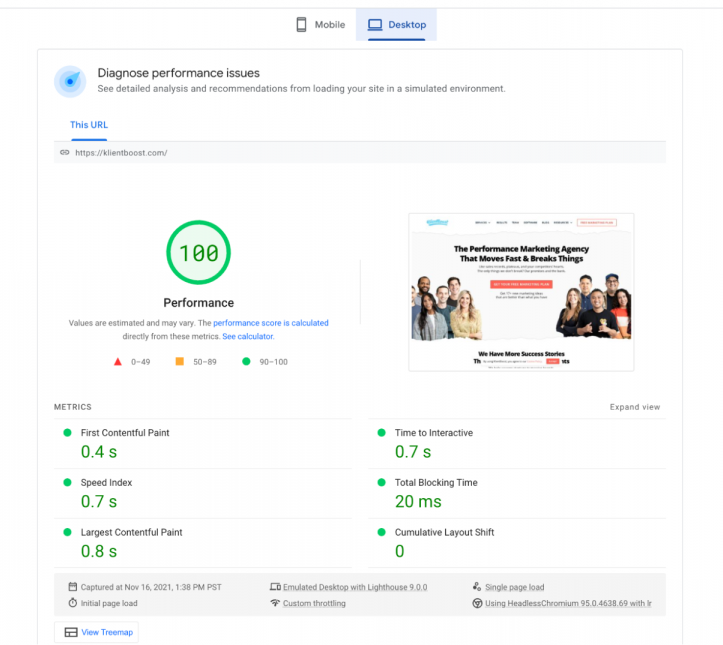

Not sure how fast your page loads? Google has a free page speed checker called PageSpeed Insights.

Increasing page speed might be the most difficult (and technical) task of technical SEO.

Good thing we know exactly what you should do to make your website faster:

- Enable browser caching: Browser caching is when a visitor’s browser stores a version of your website after it loads so it doesn’t need to keep loading it every time someone re-visits a page.

- Compress files: You can compress your HTML, CSS, and JavaScript files using Gzip data compression. And you can compress your large image files before uploading them to your website using standard compression software.

- Consider a CDN: Content delivery networks (CDNs) use a combination of servers around the globe, all of which store your website files, to load files from the closest server to the visitor.

- Minify JavaScript and CSS: If you eliminate unnecessary line breaks and spacing within your code, you can reduce the time it takes to download and parse your files. Thankfully, tools exist that will automatically minify your code, like CSS Minifier, HTML Minifier, and UglifyJS.

- Limit plugins: WordPress plugins are notorious for bogging down load times. Always delete inactive plugins, and try to limit the number of plugins you use to begin with.

- Use “next-gen” image formats: For many websites, slow page speed boils down to massive, web-unfriendly image formats. Consider adopting “next-gen” image formats like WebP (created by Google) or JPEG XR (created by Microsoft). Both are optimized for performance.

- Use asynchronous loading: Asynchronous loading means that web files can load simultaneously (and quicker), not one after the other.
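To see why compression and minification are on the list above, here’s a quick sketch that measures both on a made-up stylesheet. The `minify_css` function is a deliberately naive illustration (it can break CSS that contains strings or relies on whitespace in selectors), so use a dedicated minifier like the tools mentioned above for real code.

```python
import gzip
import re


def minify_css(css):
    """Naive minifier: strips comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # drop comments
    css = re.sub(r"\s+", " ", css)                   # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)     # trim around punctuation
    return css.strip()


def gzip_size(text):
    """Size in bytes of the text after Gzip compression."""
    return len(gzip.compress(text.encode("utf-8")))


# Hypothetical stylesheet: repetitive rules, like most real CSS.
css = "\n".join(
    f".card-{i} {{\n  margin: 0 auto;\n  padding: 16px;\n  color: #333333;\n}}"
    for i in range(200)
)
minified = minify_css(css)

print(f"original: {len(css.encode('utf-8'))} bytes")
print(f"minified: {len(minified.encode('utf-8'))} bytes")
print(f"minified + gzip: {gzip_size(minified)} bytes")
```

In practice your web server or CDN applies Gzip for you once it’s enabled; you wouldn’t compress files by hand like this.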

For a full list of Google’s page speed recommendations, check here.

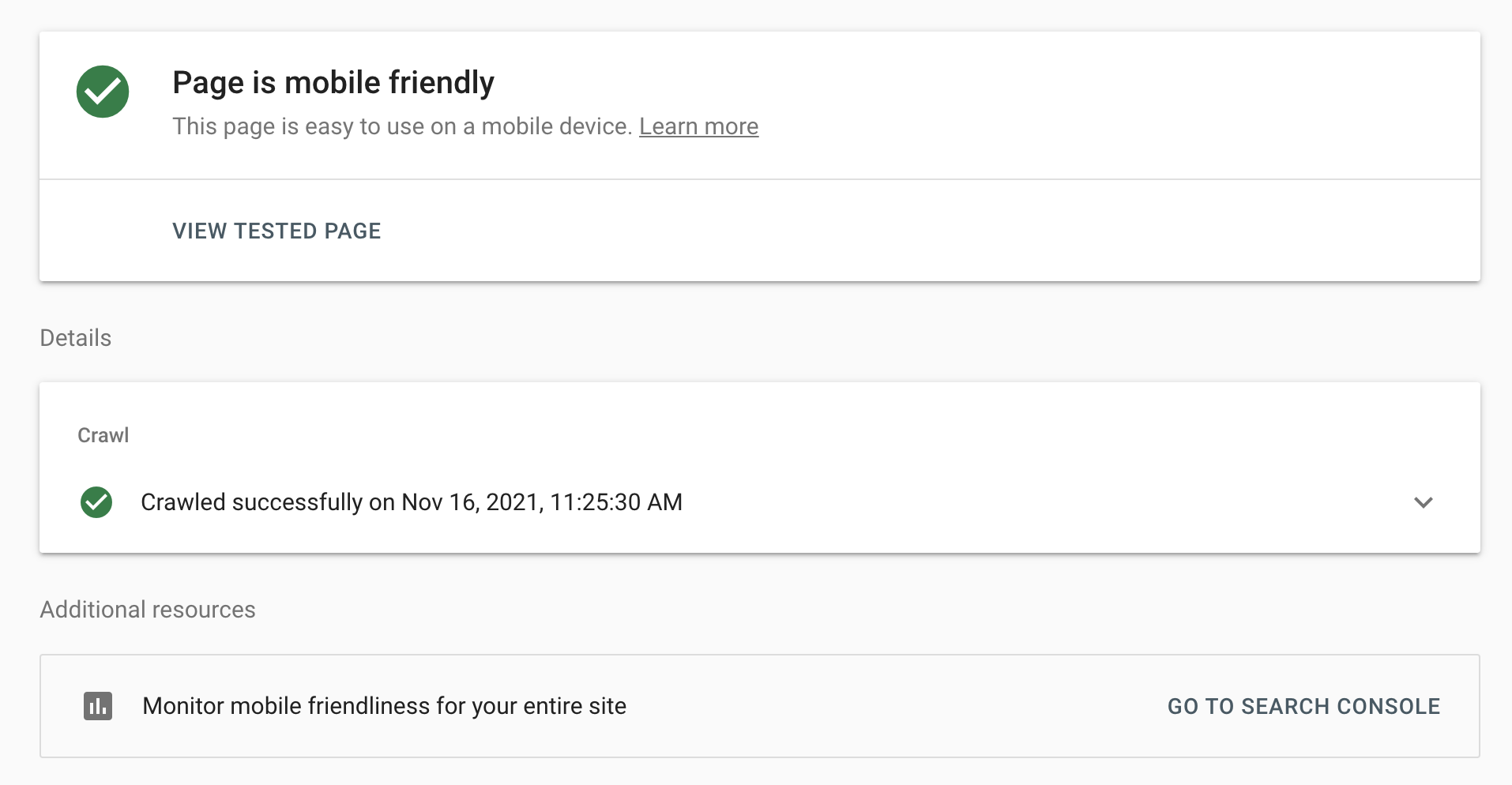

Mobile friendliness

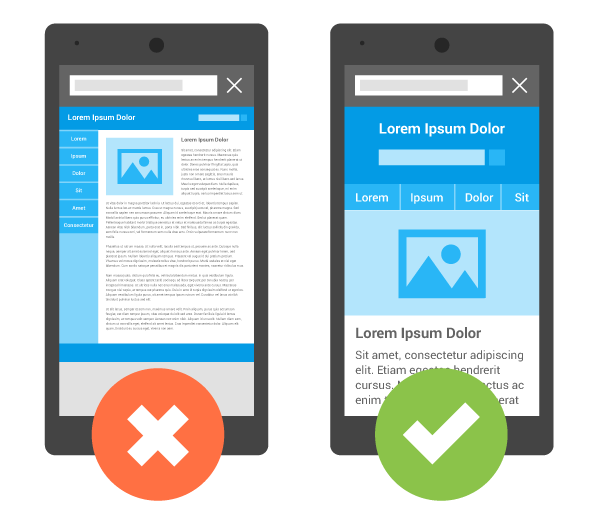

A mobile-friendly website (or “mobile-responsive” website) is a website that is just as easy to load, view, and navigate on a phone or tablet as it is on a desktop.

Why is mobile-friendliness so important?

Over 50% of search queries now take place on a mobile device. And according to Google, mobile-friendliness is now a ranking factor, which means that mobile-friendly sites will rank higher than non-mobile-friendly sites (all other things being equal).

Attributes of a mobile-friendly website:

- Make it responsive: Instead of using separate mobile URLs (e.g. m.example.com), keep mobile versions on the same URLs and use breakpoints to adjust the dimensions and layout as the screen gets smaller. (Google still supports mobile URLs, but doesn’t recommend them.)

- Keep it fast: Compress large image files, keep custom fonts to a minimum, consider AMP (Accelerated Mobile Pages), and minify your code. Less is more when it comes to mobile.

- Remove pop-ups: Pop-ups annoy visitors on a big screen, but on a tiny mobile device, they can feel like someone is smacking you in the face.

- Make buttons large: Mobile visitors tap and scroll with their thumbs, not a mouse. Make sure big thumbs can hit buttons by making them bigger and including plenty of white space around them.

- Use large font size: Never use a font smaller than 15px; it’s too small to read on a mobile device.

- Keep it simple: In many cases, you’ll need to remove sections for a mobile viewport, otherwise the tiny screen may be too cluttered with information. Trim where you can, keep what’s important.

Last, use Google’s mobile-friendly testing tool to see if your site passes.

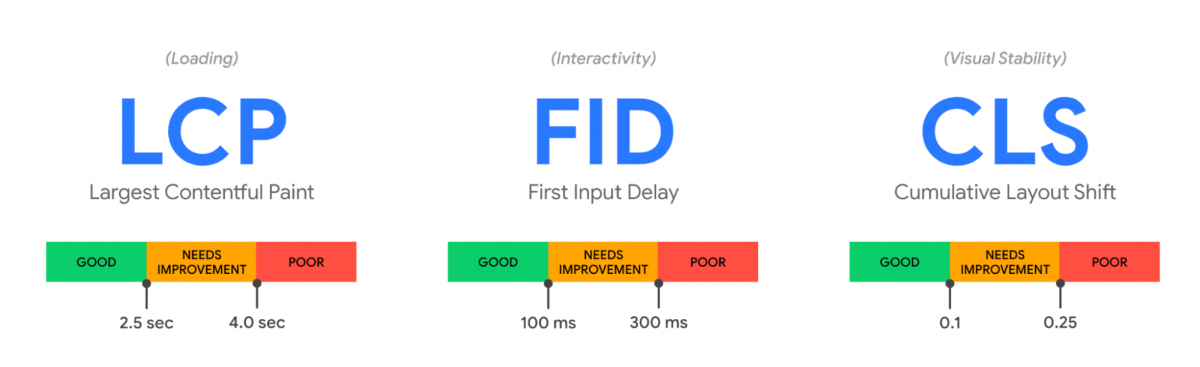

Core Web Vitals

In short, Core Web Vitals refers to the three elements (all technical) Google believes every website with a quality user experience should include:

- Fast loading (LCP): The time it takes to load the largest element of a web page should be no longer than 2.5 seconds

- Interaction with website (FID): The time it takes for your website to respond after a visitor first interacts with it (e.g. taps a button or clicks a link) should be 100ms or less. (In March 2024, Google replaced FID with Interaction to Next Paint, or INP, which should be 200ms or less.)

- Stable visuals (CLS): You should keep unexpected layout shifts to a minimum (i.e. when sections of content move on the screen because other elements like advertisement blocks load later). Any score higher than 0.1 needs improvement (CLS is a unitless score, not a time in seconds).
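If you collect these numbers for a lot of pages, a small helper can flag the ones that miss the mark. This is a minimal sketch using the LCP, FID, and CLS thresholds listed above; the metric names and the sample measurements are hypothetical, and you’d feed in real data from a tool like PageSpeed Insights.

```python
# Thresholds from the list above: at or below each value counts as passing.
THRESHOLDS = {
    "lcp_seconds": 2.5,
    "fid_ms": 100,
    "cls": 0.1,
}


def assess_core_web_vitals(measurements):
    """Return {metric: True/False}, where True means within the threshold."""
    return {
        metric: measurements[metric] <= limit
        for metric, limit in THRESHOLDS.items()
    }


# Hypothetical measurements for a single page.
page = {"lcp_seconds": 3.1, "fid_ms": 80, "cls": 0.05}
report = assess_core_web_vitals(page)
failing = [metric for metric, passed in report.items() if not passed]
print(failing)  # ['lcp_seconds']
```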

We know—there’s a lot to unpack here. Head over to our UX for SEO article to learn more.

Technical SEO parting thoughts

Now that you know which responsibilities fall within the technical SEO wheelhouse, you can start auditing your website’s technical foundation in search of opportunities to fix.

Good news: we wrote an entire article that covers how to perform a technical SEO audit.

In it, we’ll go over the different SEO tools you can use to perform your site audit, like Google Search Console, Bing Webmaster Tools, Ahrefs, Semrush, and many more.

See you there!